April 12, 2009

Operational losses, much more than a tail only!


Introduction.

This article is about operational losses and how insight into these losses can be extremely valuable for any organization. It describes a best-practice approach to implementing loss recording and reporting. It does not dive into the modelling of operational risk, because plenty of information is already available on that subject. For those less familiar with operational risk management, an operational loss represents (potential) financial or reputational damage resulting from a failing human, system or process, or from external threats. Some examples for a bank:

- bank robbery

- delayed or not executed transactions

- advice inconsistent with the customer’s risk profile

- documentation lost, incomplete contract

- fraud

Background.

Most financial institutions collect operational loss data to model extreme operational risk events. These events are usually found in the tails as calculated by means of Monte Carlo simulations. The effort of collecting data and modelling operational risk is often driven by the goal of becoming Basel II compliant. The more advanced the operational risk program of a company is, the less regulatory capital needs to be set aside as a buffer against extreme events. The operational risk management requirements for Basel II compliance are set by the BIS, and compliance requires approval from the regulator. The objective of the Basel II accord is to stimulate the development of advanced risk management methods. Banks have an economic need to keep regulatory capital as low as possible, and at the same time need to prove to investors and rating agencies that they have robust risk management practices in place.

Although the focus on the extreme event or on the reduction of capital is understandable, the side effect is that an organization often forgets to fully leverage the power of insight into its losses. This insight can really boost the risk awareness and the self-learning capacity of an organization, and at the same time dramatically reduce the costs resulting from failures.

Loss management for business as usual.

Who needs to record losses?

When setting up loss registration you first have to consider what type of organization you need to facilitate. There is a difference between an organization that offers low-frequency, high-value, tailor-made financial products and an organization that offers high-frequency, low-value, standardized and highly automated services.

In the first case you can assign the responsibility for registering losses to the ORM unit, simply because the frequency is low and most losses will have a considerable impact and will require detailed and immediate analysis. In the latter case the number of losses can be considerable and the losses are probably recurring. In this situation it is best to make the operational business units responsible for the registration of losses in the loss database. When you have to build up your loss management from the ground up, there is one other important consideration. A big part of the information you need is probably already in your complaints management system. Consider extending your complaints management system with an interface to your operational risk management system to avoid manual duplication of readily available information.

Different approaches are in use to distinguish losses from other financial transactions. Some banks use a centralized approach, validating general ledger entries to see whether any need to be flagged as operational losses. I think this method has only one major advantage and many drawbacks. It usually requires limited effort to implement, but it is very likely that many operational losses will remain undetected and that the information will be incomplete. In other words, the advancement of insight into your organization’s operational risk will remain limited.

The decentralized detection and recording approach demands much more effort from the ORM unit. It requires that almost every department understands what operational losses are and how to record them. However, once it is in place you’re in business. The simple fact that the business recognizes and records operational losses is the best starting point for improving and sustaining risk awareness and risk management at the operational level. By ensuring that you can aggregate these losses, you will be able to present an operational risk heat map for your organization and manage operational risk at tactical (product) and strategic (company) levels. I advise combining the recording of a loss with its financial booking. This adds an additional quality and integrity layer to your loss recording process, simply because financial accounting controls then apply. It will also help you to avoid discussions about the correctness of the loss data and about who is responsible for that correctness.

Now what about legal claims or potential regulator fines? These need to be recorded in your loss management system before a financial transaction, if any, occurs. Once it is clear whether there will be any financial impact, simply follow the regular procedure and make sure that no double recording can happen.

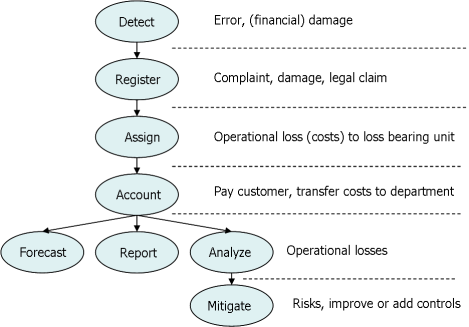

In all cases it should remain the responsibility of the business, not the ORM unit, to record all losses in a timely, complete and correct manner. The figure shows a high-level loss management process flow. I would like to put some emphasis on the third step. The assignment of the loss should be tied to acceptance of the loss by the loss-bearing unit. This confirmation that responsibility for the loss is accepted adds to the quality of the information as well as to sustained risk awareness at the operational levels of your organization.

Who needs to pay the loss?

Losses should be accounted for per business line, as prescribed by the BIS. The losses are input for capital calculations, and capital is subsequently assigned to profit centres. However, you also want to make visible who is responsible for the losses that occur. In general it is best to assign losses to the department that causes them. Some operational losses are accepted as part of the design of a product or process, for instance losses that result from skimming a debit card that carries its information on a magnetic stripe. These losses should be assigned to the product owner. Other losses result from the wrongful execution of procedures. These losses should be assigned to the department that did not adhere to the procedure. Sometimes losses are caused by service providers. It is best to assign these losses to the department that owns the contract with that service provider.
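
The assignment rules above can be written down as a small decision function. This is only a sketch of my own: the `LossOrigin` names and the `responsible_unit` function are hypothetical, and a real process will have more cases and exceptions.

```python
from enum import Enum


class LossOrigin(Enum):
    """Hypothetical classification of where a loss originates."""
    PRODUCT_DESIGN = "accepted as part of the product or process design"
    PROCEDURE_BREACH = "procedure not followed"
    SERVICE_PROVIDER = "caused by an external service provider"


def responsible_unit(origin, product_owner, breaching_department, contract_owner):
    """Return the unit a loss is assigned to, following the three rules in the text."""
    if origin is LossOrigin.PRODUCT_DESIGN:
        return product_owner
    if origin is LossOrigin.PROCEDURE_BREACH:
        return breaching_department
    if origin is LossOrigin.SERVICE_PROVIDER:
        return contract_owner
    raise ValueError(f"Unknown loss origin: {origin!r}")
```

A skimming loss would be passed in as `LossOrigin.PRODUCT_DESIGN` and end up with the product owner, regardless of which branch or channel reported it.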

No matter what, when customers have to be paid, it goes without saying that the loss management process should never delay the payment.

What needs to be recorded?

All operational losses above a certain threshold need to be recorded. Although one can decide to minimize the administrative effort by increasing the threshold, it is better to start with a low threshold and optimize it after a few years of experience. Working for a retail organization, it is my experience that a threshold of 1000 euros works well, and I think this is a sensible threshold for any organization. The idea behind this is that most errors that cause a relatively low financial impact can also result in incidents with a high financial impact.

For modelling and benchmarking purposes an organization can become a member of ORX or ORIC. These organizations work with thresholds of 20,000 dollars and £10,000 respectively, so a threshold of 1000 euros, pounds or dollars will fit. However, the ORX or ORIC thresholds should not be used as a guideline when setting your own loss threshold, because their purpose is totally different, as I will show later.

Now what attributes does a loss record have? You should take the minimal requirements from BIS and ORX or ORIC. I’ve outlined these requirements here, but I do not promise that they will remain up to date. Just check with ORX or ORIC.

• Reference ID number (Member generated)

• Business Line (Level 2) Code

• Event Category (Level 2) Code

• Country (ISO Code)

• Date of Occurrence

• Date of Discovery

• Date of Recognition

• Credit-related

• Gross Loss Amount

• Direct Recovery

• Indirect Recovery

• Related event Ref ID

This list is an excellent example to demonstrate why loss management has different purposes. When loss management is limited to these attributes it can only be used for benchmarking. When you want to use the information for internal analysis to reduce losses or review controls there is a variety of attributes missing. For internal analysis and reporting you also need to record:

• Type of loss, i.e. operational loss, operational loss component of a credit loss, or (legal) claim.

• A descriptive text outlining what has happened and why.

• Which department needs to take this loss in its books.

• Which department caused this loss (see “Who needs to pay the loss?”).

• Loss event category, i.e. what event occurred (it is very easy to make fundamental mistakes here).

• Loss cause category, i.e. what caused the event that resulted in the loss.

• Product or service, such as mortgages, credit cards, loans etc. (usually requires more hierarchies).
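
To make the two attribute lists concrete, here is a minimal sketch of a loss record in Python. The class name, the field names, the `net_loss` calculation and the "at or above the threshold" rule are my own assumptions for illustration; they are not an ORX, ORIC or BIS data format.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Threshold suggested in the text; an assumption to tune per organization.
RECORDING_THRESHOLD_EUR = 1000


@dataclass
class LossRecord:
    # Minimal benchmarking attributes (field names are illustrative)
    reference_id: str
    business_line_code: str
    event_category_code: str
    country_iso: str
    date_of_occurrence: date
    date_of_discovery: date
    date_of_recognition: date
    credit_related: bool
    gross_loss_amount: float
    direct_recovery: float = 0.0
    indirect_recovery: float = 0.0
    related_event_ref_id: Optional[str] = None
    # Additional attributes for internal analysis and reporting
    loss_type: str = "operational loss"
    description: str = ""
    bearing_department: str = ""
    causing_department: str = ""
    internal_event_category: str = ""
    cause_category: str = ""
    product: str = ""

    def net_loss(self) -> float:
        """Gross loss minus direct and indirect recoveries (assumed definition)."""
        return self.gross_loss_amount - self.direct_recovery - self.indirect_recovery

    def must_be_recorded(self) -> bool:
        """True when the gross loss is at or above the recording threshold."""
        return self.gross_loss_amount >= RECORDING_THRESHOLD_EUR
```

Keeping the benchmarking fields and the internal fields in one record means the same entry can serve both external data submission and internal analysis.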

Now this all sounds pretty straightforward, but there are generic issues that probably require some additional effort to ensure alignment with other supporting processes. I recommend aligning your product and services list with financial reporting and complaints management. This will allow you to incorporate operational losses in your cost reporting more easily and to correlate operational losses with customer complaints. Furthermore, for reporting purposes you should make sure that you can aggregate losses at each hierarchical level in your organization and at product and product group level.
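
Aggregation at several levels is easy once every loss carries its position in both hierarchies. The following sketch uses made-up divisions, departments, products and amounts; the `aggregate` helper is hypothetical and only shows the idea.

```python
from collections import defaultdict

# Hypothetical losses: (division, department, product group, product, amount in EUR)
losses = [
    ("Retail", "Payments", "Cards", "Debit card", 1200.0),
    ("Retail", "Payments", "Cards", "Credit card", 3400.0),
    ("Retail", "Lending", "Loans", "Mortgage", 15000.0),
    ("Wholesale", "Trade Finance", "Loans", "Corporate loan", 50000.0),
]


def aggregate(rows, key_indexes):
    """Sum the loss amounts, grouped by the columns listed in key_indexes."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[i] for i in key_indexes)
        totals[key] += row[-1]  # the amount is the last column
    return dict(totals)


by_division = aggregate(losses, [0])
by_department = aggregate(losses, [0, 1])
by_product_group = aggregate(losses, [2])
```

The same totals, put into a division by product group grid, are the raw material for a heat map.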

When you intend to apply for Basel II compliance it is mandatory to use standard loss categories. Unfortunately, when you limit the loss recording to those categories, your loss analysis and reporting capabilities will be severely impacted. The problem with these categories is that they are often too vague and, when used in aggregated loss reporting, do not allow a manager to set priorities or take action. A potential solution is to connect these mandatory categories to your business-defined categories, but not to show them in the loss management process.
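
Connecting the two sets of categories can be as simple as a lookup table that the business never sees. In this sketch the business categories and their mapping are my own illustrative choices; the right-hand side uses Basel II level 1 event types, and any real mapping needs to be validated by the ORM unit.

```python
# Business-defined category -> Basel II level 1 event type (illustrative mapping)
BUSINESS_TO_BASEL = {
    "Bank robbery": "External Fraud",
    "Card skimming": "External Fraud",
    "Delayed or failed transaction": "Execution, Delivery & Process Management",
    "Lost or incomplete documentation": "Execution, Delivery & Process Management",
    "Advice inconsistent with risk profile": "Clients, Products & Business Practices",
}


def basel_category(business_category: str) -> str:
    """Translate a business category into its mandatory reporting category."""
    try:
        return BUSINESS_TO_BASEL[business_category]
    except KeyError:
        raise ValueError(
            f"No Basel mapping for business category: {business_category!r}"
        ) from None
```

Failing loudly on an unmapped category is a deliberate choice here: a silent default would quietly pollute the regulatory reporting.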

When do losses need to be recorded?

Losses should be recorded (and booked) within a short period after detection. The larger the loss the more difficult this will be, but a limit of 20 days should be do-able. For large (potential) losses you can decide to design a same-day emergency reporting process to inform senior management more quickly.

For operational losses that are part of a credit loss there is a difficulty. Most of these credit loss components, with the exception of fraud, usually emerge at the moment when a loan defaults. The best approach is to record at the earliest possible time i.e. just after detection.

Where do losses need to be recorded?

Preferably losses are recorded in a central loss database and, of course, in the general ledger. This way it is easy to report on operational loss trends and to get central oversight of them. Centralized storage is also beneficial for operational risk modelling, forecasting and Basel II compliance. It also allows you to analyze losses and determine where the concentrations of losses can be found.

What quality controls could be implemented?

One important control is a frequent sign-off by management stating the full coverage, completeness and correctness of the recorded losses. A review by the ORM department of the correctness of the recording should also be considered, preferably combined with the analysis of the important losses. Ensuring quality is important for modelling, reporting and analysis. Combining this review with a reconciliation of the loss database against the general ledger also adds to the quality and the trustworthiness of the information.
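
A reconciliation of the loss database against the general ledger can be sketched as a comparison per booking reference. I assume here that both systems share such a reference and that amounts are in the same currency; the `reconcile` function and the sample data are hypothetical.

```python
def reconcile(loss_db, ledger, tolerance=0.01):
    """Compare loss amounts per booking reference.

    Returns a tuple of:
    - references present in the loss database but not in the ledger,
    - references present in the ledger but not in the loss database,
    - references where the amounts differ by more than the tolerance.
    """
    missing_in_ledger = sorted(set(loss_db) - set(ledger))
    missing_in_loss_db = sorted(set(ledger) - set(loss_db))
    mismatches = {
        ref: (loss_db[ref], ledger[ref])
        for ref in set(loss_db) & set(ledger)
        if abs(loss_db[ref] - ledger[ref]) > tolerance
    }
    return missing_in_ledger, missing_in_loss_db, mismatches


# Made-up example data: booking reference -> amount in EUR
loss_db = {"GL-1001": 1500.00, "GL-1002": 2300.00, "GL-1003": 1980.00}
ledger = {"GL-1001": 1500.00, "GL-1002": 2350.00, "GL-1004": 4100.00}

missing_in_ledger, missing_in_loss_db, mismatches = reconcile(loss_db, ledger)
```

Each of the three result sets points to a different follow-up: a loss that was never booked, a booking that was never recorded as a loss, or an amount that needs correcting.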

What to do with the loss information?

The added value of operational loss information has already been outlined in various places in this overview. One of the most important things you should do with losses is to ensure frequent (monthly) reporting at all levels of your organization. This way you enable management to develop risk awareness and to actively manage operational risks or failing controls. You could also use loss information to create a set of Key Risk Indicators (KRI) or as input for a risk heat map.

Usually loss recording is required to model operational risk as part of a Basel II compliance application package. Using losses to measure the quality of execution in your organization, by incorporating loss thresholds in performance contracts, takes it one step further. Losses are backward looking, but by analyzing losses in detail you can also develop operational risk indicators: indicators that signal an increased level of risk that requires management attention to avoid an increase in losses. Loss information can also be used to review your insurance strategy.

I’ve outlined many ways to take advantage of operational loss information in a business-as-usual environment. But the best example is given by this quote from a senior manager at a large bank. Immediately after assuming a new position he asked for two things: “Show me the losses and show me the audit findings so I can see whether or not we are in control”.

Summarizing key success factors for operational loss management.

- implement loss detection and registration process at the lowest level in your organization

- combine this process with the financial accounting of the operational loss

- provide clear guidance on how to allocate the loss

- make sure event categories are aligned with the business processes

- report monthly at operational, product and hierarchical levels. Report concentrations, trends and forecasts.

- incorporate loss frequency in the performance contracts

Summarizing key benefits of operational loss management.

- reduced regulatory capital

- reduction of costs

- sustained risk awareness at all levels in your organization

- transformation of incident driven management into risk/reward management

- improved insight in performance of recovery departments (legal, security, etc)

Conclusions.

This article outlined the added value of an operational loss management process in a business as usual context. The process will improve the learning capability of an organization. It also can be a very effective instrument to reduce costs. This practice has been developed for financial institutions, but should be considered for any medium to large organization.

A careful implementation of the process is required to leverage the potential to the full. It will require backing from senior management with the ambition to improve transparency. Following mandatory guidelines from BIS and regulators is as important as alignment with day-to-day business processes.

March 10, 2009

Solvency II, dealing with Operational Risk


About the authors

Dr. ing. Jürgen H.M. van Grinsven is director of Deloitte Enterprise Risk Services and author of the books Improving Operational Risk Management and Risk Management in Financial Institutions.

Drs. Remco Bloemkolk works at ING corporate risk management and has written this article in his personal capacity. Prior to ING he worked at Swiss Re and Ernst & Young.

Introduction.

Historically, insurers have focused on understanding and managing investment and underwriting risk. However, recent developments in operational risk management, guidelines by the rating agencies and the forthcoming Solvency II regime increase insurers’ focus on operational risk. Insurers consequently have to decide on their approach to managing operational risk.

The Solvency II framework consists of three pillars, each covering a different aspect of the economic risks facing insurers, see figure 1. This three-pillar approach aims to align risk measurement and risk management. The first pillar relates to the quantitative requirement for insurers to understand the nature of their risk exposure. As such, insurers need to hold sufficient regulatory capital to ensure that (with a 99.5% probability over a one-year period) they are protected against adverse events. The second pillar deals with the qualitative aspects and sets out requirements for the governance and risk management of insurers. The third pillar focuses on disclosure and transparency requirements by seeking to harmonise reporting and provide insight into insurers’ risk and return profiles.
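
To illustrate what a 99.5% probability over a one-year period means in practice, the sketch below simulates annual operational losses and reads off the 99.5% quantile. It is not the Solvency II standard formula. The Poisson frequency, the lognormal severity and all parameter values are our own assumptions, chosen purely for illustration.

```python
import math
import random


def poisson(rng, lam):
    """Draw a Poisson-distributed event count (Knuth's multiplication method)."""
    limit = math.exp(-lam)
    count, product = 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_annual_losses(n_years, lam, mu, sigma, seed=42):
    """Simulate total losses per year: Poisson frequency, lognormal severity."""
    rng = random.Random(seed)
    totals = []
    for _ in range(n_years):
        n_events = poisson(rng, lam)
        totals.append(sum(rng.lognormvariate(mu, sigma) for _ in range(n_events)))
    return totals


def quantile(values, q):
    """Empirical quantile: smallest value with at least a fraction q at or below it."""
    ordered = sorted(values)
    index = max(0, math.ceil(q * len(ordered)) - 1)
    return ordered[index]


# Assumed parameters: 25 loss events per year on average, heavy-tailed severity.
annual = simulate_annual_losses(n_years=10_000, lam=25, mu=8.0, sigma=1.5)
expected_loss = sum(annual) / len(annual)
loss_at_995 = quantile(annual, 0.995)
```

The gap between `loss_at_995` and `expected_loss` gives a feel for the buffer needed to survive a one-in-200-year outcome under these assumptions.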

Solvency II (SII) is the updated set of regulatory requirements for insurance companies operating in the European Union. It revises the existing capital adequacy regime and is expected to come into force in 2012. It has a number of expected benefits, both for insurers and consumers. Although the most obvious benefit seems to be preventing catastrophic losses, other less obvious benefits which are considered to be important are summarised in table 1.

Table 1.

These expected benefits make SII an increasingly important issue for insurers. Not surprisingly, solvency has evolved into an academic discipline of its own and much of its literature is aimed at the quantitative requirements. Yet, despite the progress made in SII, the next section indicates that insurers will also encounter a number of difficulties and challenges in operational risk before they can utilise these expected benefits.

The importance of operational risk in Solvency II.

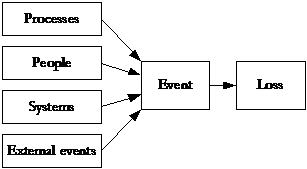

Over the past few decades many insurers have capitalised on the market and have developed new business services for their clients. On the other hand, the operational risks that these insurers face have become more complex, more potentially devastating and more difficult to anticipate. Although operational risk is possibly the largest threat to the solvency of insurers, it is a relatively new risk category for them. It has been identified as a separate risk category in Solvency II. Operational risk is defined as the capital charge for ‘the risk of loss arising from inadequate or failed internal processes, people, systems or external events’. This definition is based on the underlying causes of such risks and seeks to identify why an operational risk loss happened, see figure 2. It also indicates that operational risk losses result from complex and non-linear interactions between risk and business processes.

Figure 2.

Several studies in different countries have attributed insurance company failure to under-reserving, under-pricing, under-supervised delegation of underwriting authority, rapid expansion into unfamiliar markets, reckless management, abuse of reinsurance, shortcomings in internal controls and a lack of segregation of duties. See the examples below. Unbundling operational risk from other risk types in risk management and risk measurement can help prevent future failures. This holds true for smaller and larger losses. Often, larger losses are the cumulative effect of a number of smaller losses. In other words, they are the result of the bad practices that flourish in excellent economic circumstances, when the main focus is on managing the business rather than operational risks.

Unbundling operational risk from the other types of risk involved in managing and measuring risk may help prevent future failures.

Examples of insurance company failure:

Insurance company failures in which operational risk played a significant role include:

- The near-collapse of Equitable Life Insurance Society in the UK, which resulted from a culture of manipulation and concealment, where the insurer failed to communicate details of its finances to policyholders or regulators.

- The failure of HIH Insurance, which resulted from the dissemination of false information, money being obtained by false or misleading statements and intentional dishonesty.

- American International Group (AIG) and Marsh, where the CEOs were forced from office following allegations of bid-rigging. Bid rigging, which involves two or more competitors arranging non-competitive bids, is illegal in most countries.

- Delta Lloyd, Fortis ASR and Nationale Nederlanden (the Netherlands) agreed to compensate holders of unit-linked insurance policies for the lack of transparency in the product cost structures.

The above examples illustrate that such losses are not isolated incidents in the insurance industry, but instead occur with some regularity. The large loss events mentioned above can be drilled down into operational risk categories. Table 2 presents several examples of operational risk categories and insurer exposure.

Table 2.

Difficulties and challenges in insurers’ operational risk management.

Insurers have not historically gathered operational risk data across their range of activities. As a result, the major difficulties and challenges that insurers face are closely related to the identification and estimation of the level of exposure to operational risk. A distinction can be made between internal and external loss data, risk self-assessment, supporting techniques, tools and governance. See table 3 for an overview.

Table 3.

Loss data form the basis for measuring operational risk. Although internal loss data are considered the most important source of information, they are generally insufficient because such data are scarce and often of poor quality. Insurers can overcome these problems by supplementing their internal loss data with external loss data from consortia such as ORX and ORIC. However, using external loss data raises a number of methodological issues, including the problems of reliability, consistency and aggregation. Insurers consequently need to develop documentation and improve the quality of their data and data-gathering techniques.

Risk self-assessment (scenario analysis) can be an extremely useful way to overcome the problems of internal and external loss data. It can be used in situations in which it is impossible to construct a probability distribution, whether for reasons of cost or because of technical difficulties, internal and external data issues, regulatory requirements or the uniqueness of a situation. It also enables insurers to capture risks that relate, for example, to new technology and products as these risks are not likely to be captured by historic loss data. However, current scenario analysis methods are often too complex, not used consistently throughout a group and do not take adequate account of the insurer’s strategic direction, business environment and appetite for risk.

The techniques and tools that insurers use to support risk self-assessments are often ineffective, inefficient and not successfully implemented. Research indicates that 19.5% of current practices are often not shared within the group, while 22% of respondents are dissatisfied and 11% very dissatisfied with the quality of their information technology support services. Another question that can be raised is the governance of risk management. How, for example, are the risk and actuarial departments aligned?

Operational risk may represent the greatest threat to insurers.

Conclusions.

In this article we discussed operational risk in the context of Solvency II. Operational risk is possibly the largest threat to insurers. This is because operational risk losses result from complex and non-linear interactions between risk and business processes. Unbundling operational risk from the other types of risk in risk management and risk measurement can help prevent future failures for insurers. SII is on track to put greater emphasis on the link between risk management and risk measurement of operational risk. We have addressed the most important difficulties and challenges in operational risk management: loss data, risk management, tools, techniques and governance. Those insurers able to ensure an effective response to these major difficulties and challenges are expected to achieve a significant competitive advantage.


November 11, 2008

Supporting Operational Risk Management using a Group Support System

Original article written by Dr. Jürgen H. M. van Grinsven & Ir. Henk de Vries.

Dr. Jürgen H. M. van Grinsven is the author of Improving Operational Risk Management.

INTRODUCTION

Over the past years, various Group Support Systems (GSS) have been used to support Operational Risk Management (ORM) [1]. ORM supports decision-makers to make informed decisions based on a systematic assessment of operational risks [2] [3].

Financial Institutions (FI) often use loss data and expert judgment to estimate their exposure to operational risk [4]. Expert judgment is usually elicited either from several experts individually, often referred to as individual self-assessments, or group-wise from several experts together, often referred to as group-facilitated self-assessments [5].

While individual self-assessments are currently the leading practice, the trend is towards group-facilitated self-assessments. There is a need to support these group-facilitated assessments using Group Support Systems. In this article we discuss how Group Support Systems (GSS) can be used to support expert judgment activities to improve ORM. First we describe how a GSS can support multiple expert judgment activities. Second, we present a case study describing the application of a specific GSS, GroupSystems, to ORM.

BACKGROUND

Expert judgment is defined as the degree of belief that a risk occurs, based on the knowledge and experience an expert draws on in responding to certain questions about a subject [6] [7]. Expert judgment is increasingly advocated in FIs for identifying and estimating the level of uncertainty about Operational Risk (OR) [5]. Moreover, expert judgment can be used to incorporate forward-looking activities in ORM.

Group Support Systems (GSS) can be used for the combined purposes of process improvement and knowledge sharing [8]. GSS can be seen as an electronic technology that supports a common collection of tasks in ORM such as idea generation, organization and communication. GSS aim to improve (collaborative) group work [9] [10]. Effectiveness and efficiency gains can be achieved by applying GSS to structure multiple experts’ exchange of ideas, opinions and preferences. There are several GSS tools available to support multiple experts in collaborative group work [11]. Examples are: GroupSystems, Facilitate, WebIQ, Meetingworks and Grouputer. Grinsven [5] presents an overview of GSS tools, based on GroupSystems, that can be used for supporting experts in the ORM phases, see Table 1 for examples.

| ORM phase | General description | Examples of GSS tools |
|---|---|---|
| Preparation | Provides the framework for the experts, taking into account the most important activities prior to the identification, assessment, mitigation, and reporting of operational risks. | Categorizer, Electronic Brainstorming, Group Outliner |
| Risk identification | Aims to provide a reliable information base to enable an accurate estimation of the frequency and impact of OR in the risk assessment phase. | Electronic Brainstorming, Group Outliner, Vote |
| Risk assessment | Aims for an accurate quantification of the frequency of occurrence and the impact | Alternative Analysis, Vote |
| Risk mitigation | Aims to mitigate those OR that, after assessment, still have an unacceptable level | Alternative Analysis, Topic Commenter |
| Reporting | Aims to provide the stakeholders such as the manager, initiator and experts with the relevant information regarding the ORM exercise. | Group Outliner |

Table 1: Examples of GSS tools [5]

GSS SUPPORTING ORM: A CASE STUDY

The ORM process consists of five phases:

- preparation

- risk identification

- risk assessment

- risk mitigation

- reporting phase

These phases can be viewed as an IPO model (Input, Processing, Output) resulting in an accurate estimate of exposure to OR as final output. In this section we present a case study describing the application of GroupSystems to each phase of the ORM process at a large Dutch FI [5].

The activities of the preparation phase can be divided into the following sub-activities:

- determine the context and objectives

- identify, select and assess the experts

- choose the method and tools

- try out the ORM exercise

- train the experts [12] [5].

The activities of the risk identification phase can be divided into identifying the OR, categorizing the OR and performing a gap analysis. One of the objectives of this phase is to arrive at a comprehensive and reliable identification of the OR, to reduce the likelihood that an unidentified operational risk becomes a potential threat to the FI. Member status, internal politics, fear of reprisal and groupthink can make the outcome of the risk identification less reliable [5]. Electronic Brainstorming was used to identify the events. Then, the Categorizer was used to define the most important OR. For this, we used a group-facilitated workshop. Using Electronic Brainstorming combined with a Vote tool, we supported the experts in performing a gap analysis. In the case study, the experts appreciated the possibility to identify OR events anonymously.

The activities of the risk assessment phase can be divided into the following sub-activities:

- assess the OR

- aggregate the results.

Grinsven [5] advises ensuring that the experts assess the OR individually, to minimize inconsistency and bias; see also e.g. [6]. We used the Alternative Analysis tool from GroupSystems to enable the experts to assess the OR individually and anonymously. Then, using the Multi Criteria tool, we calculated the results by aggregating the individual expert assessments. The standard deviation function helped us to structure the interactions between the experts. In this interaction the experts provided the rationales behind their assessments. We learned that the GSS tools helped us to prevent the results from being influenced by groupthink and the fear of reprisal.
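
The aggregation step can be illustrated with a short sketch. It does not reproduce how GroupSystems works internally. The risks, the estimates and the rule of flagging a risk for discussion when the standard deviation exceeds half the mean are our own assumptions.

```python
from statistics import mean, stdev

# Hypothetical anonymous assessments: risk -> estimated impact per expert (EUR)
assessments = {
    "Card skimming": [40_000, 45_000, 50_000, 42_000],
    "Failed payment batch": [10_000, 80_000, 15_000, 120_000],
}


def aggregate_assessments(scores, max_relative_spread=0.5):
    """Return the mean, the standard deviation and a discussion flag per risk."""
    summary = {}
    for risk, values in scores.items():
        average = mean(values)
        spread = stdev(values)  # sample standard deviation across experts
        summary[risk] = {
            "mean": average,
            "stdev": spread,
            # A large spread relative to the mean signals disagreement.
            "discuss": spread / average > max_relative_spread,
        }
    return summary


summary = aggregate_assessments(assessments)
```

In this made-up data the experts largely agree on card skimming, while the failed payment batch shows a wide spread and would be put forward for a facilitated discussion of the rationales.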

The activities of the risk mitigation phase can be divided into three sub-activities: identify alternative control measures, re-assess the residual operational risk and aggregate the results. The methods and tools that can be used in this phase are very similar to those of the risk assessment phase. However, a slightly more structured method was used to identify alternative control measures. At the Dutch FI we used the Topic Commenter tool to support this activity. Experts were enabled to elaborate on and improve the existing control measures, and provided examples for each of them. Then, we used the Alternative Analysis tool to anonymously re-assess the frequency and impact of the OR. After this, we calculated and aggregated the results using the standard deviation function combined with a group-facilitated session.

The activities of the reporting phase can be divided into two sub-activities: documenting the results and providing feedback to the experts. Documenting the results needs to follow regulatory reporting standards. This was done by an employee of the Dutch FI, using the output from the GSS as input for the report.

The intermediate results were presented to the experts immediately after the ORM sessions. Following Grinsven [5], a highly structured process was used to present these results, thereby enabling the experts to leverage the experiences gained and to maintain business continuity. We facilitated a manual workshop to provide feedback to the experts. Moreover, at the Dutch FI we made sure the final report complied with the relevant regulatory reporting standards. Future research should investigate applying GSS to provide structured feedback.

CONCLUSIONS

Expert judgment is extremely important for ORM when loss data does not provide a sufficient, robust, satisfactory identification and estimation of the FI’s exposure to OR. The case study indicates that a GSS can be used to support expert judgment in every phase of the ORM process. GroupSystems can be used in each ORM phase to support experts in order to achieve more effective, efficient and satisfying results.

GSS can be used to help gather and process information about operational risk. Moreover, GSS has the potential to improve the ORM process by minimizing inconsistency and bias and by reducing groupthink. However, more research needs to be done to find out which GSS packages besides GroupSystems are suitable to support the ORM process.

FUTURE RESEARCH

The case study indicates that GroupSystems can be used to support multiple experts in the ORM process. However, it also indicates that research is needed into the possibility of applying GSS in the reporting phase to provide structured feedback. Furthermore, for other GSS tools such as Facilitate, WebIQ, Meetingworks and Grouputer, it is not yet known whether they can be used to support expert judgment in ORM. Further research should therefore investigate the possibility of using these GSS packages to support ORM. This would also make comparisons possible.

REFERENCES

[1] Brink, G. J. v. d. (2002). Operational risk: The new challenge for banks. New York, Palgrave.

[2] Cumming, C. & Hirtle, B. (2001). The challenges of risk management in diversified financial companies. Federal Reserve Bank of New York Economic Policy Review.

[3] Brink, G. J. v. d. (2003). The implementation of an advanced measurement approach within Dresdner Bank Group. IIR Conference Basel II: Best practices in risk management and measurement, Amsterdam, Dresdner Bank Group.

[4] Cruz, M. (2002). Modeling, measuring and hedging operational risk. Wiley Finance.

[5] Grinsven, J.H.M. v. (2007). Improving operational risk management. Ph.D. Dissertation, Delft University of Technology, Faculty of Technology Policy and Management, the Netherlands, to be published.

[6] Clemen, R. T. & Winkler, R. L. (1999). Combining probability distributions from experts in risk analysis. Risk Analysis 19(2): 187-203.

[7] Cooke, R. M. & Goossens, L.H.J. (2004). Expert judgement elicitation for risk assessments of critical infrastructures. Journal of Risk Research 7(6): 643-656.

[8] Kock, N. & Davison, R. (2003). Can lean media support knowledge sharing? Investigating a hidden advantage of process improvement. IEEE Transactions on Engineering Management 50(2): 151-163.

[9] Vogel, D., J. F. Nunamaker, W.B. Martz, R. Grohowski & C. McGoff (1990). Electronic meeting systems experience at IBM. Journal of Management Information Systems 6(3): 25-43.

[10] Nunamaker, J. F., A. R. Dennis, et al. (1991). Electronic Meeting Systems to Support Group Work. Communications of the ACM 34(7): 40-61.

[11] Austin, T., N. Drakos & J. Mann (2006). Web Conferencing Amplifies Dysfunctional Meeting Practices. Nr. G00138101, Gartner, Inc.

[12] Goossens, L.H.J. & Cooke, R.M. (2001). Expert Judgement Elicitation in Risk Assessment. Assessment and Management of Environmental Risks, Kluwer Academic Publishers.

September 29, 2008

Credit crisis bankparade interactive.

Now that credit crisis events are spreading to Europe, an update of the extensive credit crisis overview was necessary. Follow this link to the guided analysis for an interactive experience or follow this link for a graphical view on the crisis.

Investors assume that all problems are credit crisis related. The Fortis problems, however, are the result of a badly timed take-over of ABNAMRO, poor communication of capital ratio recovery actions and a lack of trust in the capability of Fortis to obtain the required investments in time. From an operational perspective the bank claims it is still a healthy business generating revenues. The issues in England are different from those in Belgium and the Netherlands. The housing market in England is also deteriorating and, combined with the lack of confidence in banks, this has resulted in the take-over of several mortgage lenders.

However, prudent banking is still there. Another company that participated in the ABNAMRO take-over has enough cash to roam the financial world for interesting bargains. Santander already showed after the take-over of ABNAMRO that it is able to act swiftly, decisively and with success. As part of the take-over it acquired the Brazilian bank Banco Real and the Italian bank Antonveneta. It quickly sold Antonveneta to another Italian bank and now has the power to profit from the opportunities the credit crisis offers. Today it was announced that Santander only had to pay 600 million euro for 21 billion euro in deposits and the branches of Bradford and Bingley. Earlier this year it obtained the European banking assets of General Electric. It shows the big differences between the banks that joined the team that bought ABNAMRO one year ago.

September 16, 2008

Merrill take-over by BofA, an informed decision?

Yesterday I expressed my concerns regarding the rationale behind the take-over of Merrill Lynch. Today several commentators are wondering why BofA is willing to pay much more than the market value. It seems obvious there is more to it than the public knows at this moment. What is in it for BofA? Maybe there is a reason to share this with BofA’s shareholders? The initial view of analysts is that it probably is a forced deal and I’m inclined to agree with this. One day after the “deal” there is general concern about whether BofA can absorb Merrill’s troubles. It is almost impossible to make an informed decision at such short notice. What guarantees have been given to BofA to take this risky leap in the dark? Another interesting question is whether it is possible to manage two take-overs in a period that is causing serious problems for every company in the financial industry.

From a BofA perspective a Lehman take-over would have been much more transparent. Although the risk management of Lehman proved to be inadequate, the management of Lehman in general has a much better track record when you look at business ethics or management integrity.

In the past year Merrill has been accused of many things except high moral standards. I’m really curious how many billion dollar surprises will emerge once BofA really takes over control. Revisiting the credit crisis events shows that a reduced level of management integrity has proved to be a reliable key risk indicator of corporate failure in difficult economic times. To substantiate this I’ve included a list of Merrill events covering the last 12 months. This list was extracted from the Riskfriends guided analysis. BofA shareholders, read and think again!

| Date | Event | Detail |
|---|---|---|
| 2008-09-14 | Take over | Bank of America did take over Merrill although it was |
| 2008-08-21 | Law suits | Agreed to buy back $12 billion of ARS and pay a fine of $ |
| 2008-08-04 | Integrity concerns | Merrill suspected of colluding with UBS manipulating the |
| 2008-07-29 | Capital ratio recovery | Planning to raise another $8.5 billion |
| 2008-07-29 | Investment lost | Lone Star Funds buys CDO’s from |
| 2008-07-23 | Law suits | Los Angeles |
| 2008-07-18 | Capital ratio recovery | Sold Bloomberg part back to the company for $4.4 billion. |
| 2008-07-18 | Write off | Totaling $9.4 billion, 30 percent CDO, 30 percent credit |
| 2008-07-07 | Capital ratio recovery | Selling parts of Bloomberg and Blackrock to raise another $17 |
| 2008-06-26 | Prediction | $4.2 billion write off predicted for the second quarter |
| 2008-04-18 | Law suits | Pension fund CtW investment |
| 2008-04-18 | Job cuts | 2900 jobs in reaction to write down |
| 2008-04-17 | Write off | $9.5 billion |
| 2008-04-02 | Capital ratio recovery | $12.2 billion raised so far |
| 2008-03-18 | Liquidity issues | Wachovia states that the exposure of Merrill is 3.3 the |
| 2008-03-11 | Integrity concerns | Congress questions Stanley O’Neal |
| 2008-03-06 | Job cuts | Merrill Lynch said that it would stop making subprime |
| 2008-02-27 | Investment lost | Auction rate securities from Merrill |
| 2008-02-26 | Management leaves | CEO O’Neal steps down |
| 2008-02-08 | Investigation | SEC received request for information from the federal |
| 2008-02-07 | Integrity concerns | Accused by Massachusetts of fraud |
| 2008-02-01 | Investment lost | Merrill buying back $14 million of CDO’s |
| 2008-01-16 | Write off | $9.8 billion loss, write down $14.1 |
| 2008-01-15 | Capital ratio recovery | $6.6 billion cash raised issuing |
| 2008-01-14 | Integrity concerns | Finra investigating possible |
| 2008-01-11 | Portfolio deterioration | $15 billion write down expected |
| 2008-01-04 | Integrity concerns | Accused from hiding losses while merger was pending |
| 2007-12-24 | Capital ratio recovery | $7.5 billion acquired selling shares 13% below market |
| 2007-11-08 | Integrity concerns | SEC starts investigation investments |
| 2007-11-07 | Portfolio deterioration | Expected write offs 4th quarter another $9.4 according to CreditSights |
| 2007-11-03 | Integrity concerns | Merrill not aware of |
| 2007-10-31 | Law suits | Lawsuits by shareholders |

August 28, 2008

Reporting Key Risk indicators, what to select?

Introduction

In the previous article on the reporting of key risk indicators, the importance of a structured approach towards the selection of KRI’s was explained. It also explained why a solid information infrastructure is a prerequisite for a reliable KRI environment. In the midst of the credit crisis it is almost a certainty that regulators will in the near future demand high quality risk reporting environments within financial institutions: reporting environments that are complete, accurate and close to day-to-day business operations. The underlying KRI production process should be just as transparent as the produced KRI’s. Rating agencies as well as regulators are expected to demand insight into how the reporting process is controlled, just as they require external validation of the risk models in use.

Approach.

In many organizations a modern information infrastructure for KRI reporting will be missing. This doesn’t mean KRI’s cannot be reported. It does mean, however, that reporting will cost a lot of effort. It also limits the reporting to Key Risk effects only. Reporting more forward-looking metrics like risk causes and control effectiveness, as well as putting Key Risk effects into the right business context, is simply not doable when reports are built manually.

The following questions need to be answered before setting up KRI reporting:

- To whom will the KRI’s be reported: the board, product owners or operational managers?

- What are the main objectives at each management level and how specific are these objectives?

- What Key Risk effect information is readily available that can signal impact on objectives, and are the metrics and thresholds aligned with each other?

- Is it possible to transfer the presented Key Risk effect information into owned actions?

- Is it possible to measure the quality of the Key Risk effect information and is the owner willing to provide the requested information for this purpose?

Each management reporting level has its own characteristics. The first difference is the level of aggregation. The board probably only wants a specification of KRI’s aggregated at organization level “board minus one”. Sometimes a risk type view (like country or compliance risk) or a product view is also desired. To make the information interesting, the Key Risk effects are preferably expressed as forecasts of relative impact on personal objectives. This way the KRI report is also positioned as a tool to manage personal performance contracts.

Different management responsibilities result in different objectives, although it is a best practice to align strategic, tactical and operational objectives. The level of reporting also impacts the characteristics of the reported KRI’s. At strategic level a KRI should reflect the outcome of different scenarios. At operational level a YTD metric combined with a year-end forecast is probably more than sufficient. Finding specific objectives with owners is not that simple. Some of the required attributes could be missing. An objective should have at least:

- an owner

- an end date

- objective

- upper and lower thresholds

- near real-time measurement

Referring to performance management and balanced scorecard approaches, you find the following types of objectives categorized by decision level:

- Strategic

  - Financial

  - Market share

  - Human capital

  - Shareholder value

  - Sustainability

  - Control efficiency

- Tactical

  - Financial

  - Commercial

  - Operational excellence

  - Control efficiency

- Operational

  - Costs

  - Excellence

  - Percentage hands free processing

  - Control efficiency

Find readily available Key Risk effect information that signals impact on one or more of the aforementioned objectives. This information is usually already available in a different context. Some example Key Risk effects are:

- General provisions

- Operational losses

- Complaints and legal claims

- Regulator fines

- Employee training budget

- Staff turnover

- Accepted risks

- Information quality

- Known high risks

- Software patch backlog

- Change management frequency

The bottom line is that the metrics used should signal a potential direct negative impact on objectives. Some of these KRI’s appear to be backward looking, but can be made forward looking using regression techniques. Ideally there is enough historical information available to reliably forecast end-of-year results. The next thing that needs to be checked is whether these metrics are normalized. In other words, does the information from one source match that of another source? For example, the recording of complaints doesn’t necessarily use the same product categories as the financial accounting or sales force system. Relating KRI’s to objectives is not that difficult provided some alignment is in place. Some examples:

- Expected loss frequency impact on operational excellence.

- Expected loss amount impact on RAROC or Economic Capital.

- Expected loss amount impact on efficiency ratio.

- Expected complaint frequency impact on customer attrition.

- Expected number of risks without mitigation impact on control objectives.

- Expected internal fraud frequency impact on control objectives.

- Expected change frequency impact on service availability.

Reported KRI’s must have business owners. It should be clear who is responsible for managing each KRI. KRI’s at different dimensions (organization, product, distribution channel, customer segment) can have different owners. When building a KRI reporting environment it helps to have a clear understanding of how these different dimensions relate to each other. When an organization has a mature performance management environment, this should not be that difficult. Where this is lacking, more effort is needed to construct the KRI solution. Another thing that requires planning is the functionality needed after the reporting is in place. When KRI’s signal that objectives are at risk, management will demand action to address the risk. This requires people with analytical skills, high quality information and the tools to execute the analysis. In many cases it doesn’t make sense to report KRI’s when it is not possible to analyze the underlying information.

One of the first things management usually wants to know is how reliable the KRI information is. To make sure management doesn’t draw the wrong conclusions the KRI solution needs to be extended. The following quality measures should be considered:

- Try to construct groups of KRI’s which improve the interpretation of presented metrics. For instance, construct a KRI group of complaints, claims and losses. These KRI’s are related and the trends of the individual KRI’s should in most cases correlate.

- Extract information directly from your core systems. Think of information from systems like the General Ledger, marketing information, product specific operational data, human resource administration etc. The KRI system should not use manually corrected information. When information extracted from core systems is not reliable, this is an issue that should be solved and not circumvented. Making such issues visible in the KRI reporting can help to get them on the priority list.

- Demand that the presented information is not older than one day. This way the business can respond quickly to changes in the risk profile and is also able to see quickly whether or not initiated actions have the desired effect. It also forces the organization to implement a fully automated and transparent KRI reporting solution. This reduces the risk of human error or manipulation of information and is also more cost efficient in the long term.

- Request from the information owner a statement of completeness and correctness to get insight into the quality of information. Many KRI’s use information from business supporting processes like financial accounting, complaints and claims, audit, credit and operational loss registration, human resource administration etc. This information is not validated by customers and its quality cannot always be guaranteed.

- Ensure there is an information delivery contract which makes the information owner responsible for the timely notification of changes in the core systems. This way adjustments in the reporting environment can be planned.
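The requirement above that presented information is not older than one day can be checked mechanically before a KRI report is published. The following is a minimal sketch of such a freshness check. The feed names, extraction times and the `stale_feeds` helper are hypothetical and only illustrate the idea; they do not describe any particular reporting product.

```python
from datetime import datetime, timedelta

# "Not older than one day", taken from the quality measures in the text.
MAX_AGE = timedelta(days=1)


def stale_feeds(last_extracts, now, max_age=MAX_AGE):
    """Return the sorted names of source feeds whose last extract is older than max_age."""
    return sorted(
        name
        for name, extracted_at in last_extracts.items()
        if now - extracted_at > max_age
    )


# Hypothetical feed names and extraction times, for illustration only.
now = datetime(2008, 8, 28, 9, 0)
last_extracts = {
    "general_ledger": datetime(2008, 8, 28, 2, 0),
    "complaints": datetime(2008, 8, 26, 23, 30),
    "hr_administration": datetime(2008, 8, 27, 10, 0),
}

for feed in stale_feeds(last_extracts, now):
    print(f"KRI input '{feed}' is older than one day")
```

Reporting a stale feed alongside the KRI itself, instead of silently using old data, keeps the quality of the information visible to the reader of the report.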

Conclusion.

Creating a professional KRI reporting environment is a demanding task. The main success criteria are:

- Alignment with performance management preferably at the individual level. This way the KRI-reporting can be positioned as a tool that supports management with managing their objectives (and bonus).

- Top down approach with adequate and persistent backing from senior management.

- The creation of an information value chain that extracts and processes information from multiple core systems in a fully automated way.

- Creative professional analysts familiar with predictive modeling and with access to the information and tooling to analyze and model.

- Consistent and transparent risk and performance reporting at strategic, tactical and operational level to align management of priorities.
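The article mentions that backward-looking KRI’s can be made forward looking with regression techniques, and that an operational KRI can be a YTD metric combined with a year-end forecast. The sketch below shows one simple way to do this: an ordinary least squares trend line through the monthly values, extrapolated over the remaining months. The loss figures and the threshold are invented for the example, and a straight-line trend is an assumption; real loss data may need a different model.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def forecast_year_end(monthly_values):
    """Year-to-date total plus the fitted trend values for the remaining months."""
    months = list(range(1, len(monthly_values) + 1))
    intercept, slope = fit_line(months, monthly_values)
    remaining = range(len(monthly_values) + 1, 13)
    return sum(monthly_values) + sum(intercept + slope * m for m in remaining)


# Hypothetical operational loss amounts (thousand euro), January to August.
losses_ytd = [40, 44, 48, 52, 56, 60, 64, 68]
UPPER_THRESHOLD = 700  # hypothetical upper threshold of the objective

forecast = forecast_year_end(losses_ytd)
print(f"YTD: {sum(losses_ytd)}  year-end forecast: {forecast:.0f}")
print("objective at risk" if forecast > UPPER_THRESHOLD else "within threshold")
```

Comparing the forecast with the threshold of the objective, not only the YTD figure, is what turns the loss KRI into an early warning.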

January 23, 2008

Reporting of effective Key Risk Indicators

Introduction

A reporting system of key risk indicators must provide business management with current operational risk insight. This insight enables management to control the operation in such a manner that an optimal balance is achieved between risk effects and the measures that are taken to minimise these risk effects. A newly introduced reporting system will often increase risk awareness where awareness was limited before.

Key risk effects

Typically businesses focus mostly on key risk effects. Examples of these key risk effects are:

- Decreasing profit / increasing costs

- Higher required value of economic capital

- Number of complaints the business received in a given period

- Audit findings

Reporting of key risk effects is the baseline of operational risk reporting and should also get primary focus in a KRI reporting system. The key risk effects clarify operational results over a given period. They also provide input to any future activities that influence the key risk effect. New activities can also be devised for the sole purpose of influencing the risk effect. Note that adequate risk reporting can have influence at strategic, tactical and operational level. See the following examples:

- Strategic: poor insight into risk leads to a higher allocation of economic capital imposed by the regulator.

- Tactical: a relatively high operational loss on a specific product may lead to alteration or even cancellation of that product.

- Operational: an increased number of external complaints will motivate an operational manager to increase the focus on client friendliness.

To ensure all risk effects get the desired focus from management, objectives can be agreed on for these key risk effects. The nature of these objectives will differ per department. For example, supporting departments focus on costs where sales departments focus on margins. Objectives should be included in personal performance contracts. Management must decide what to reward when defining objectives and related performance contracts. Management could base rewards on positive results, on negative side effects and/or on indicators that the results could have been better. For example, a manager of a region can be rewarded for realising a target profit of 1.000.000 Euro. But should it also be taken into account that the number of complaints from his customers doubled? Should it be taken into account that, through an operational human error caused by a bad procedure, his business lost 200.000 Euro (despite the fact that he still realised his profit target)?

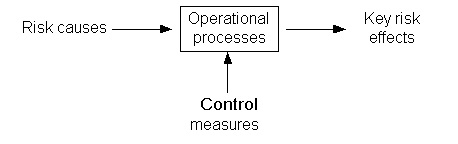

Risk causes

When operational risk effects get the desired attention from the business, it should only be a question of time before the business looks to the operational risk department for help on how to influence these key risk effects. To handle this request, operational risk must bring KRI reporting to the next level: it needs to report on the causes of key risk effects. The easy way to do this would be to work on assumptions. A logical assumption would be that the number of resources in a bank shop influences the service level and therefore the number of complaints at that bank shop. The number of resources is therefore a KRI and should be included in the reporting to management.

However, is this influence actually there and, if so, to what extent does it actually influence the number of complaints? What is the most efficient number of resources with regard to the risk of external complaints? Another, even more complex, question is what influence the number of resources has on other key risk effects. All these questions lead us to the ‘hard way’ of answering the question of how to influence the key risk effects: providing KRI’s that have a proven, explicit statistical relationship to a key risk effect.

When a clear relationship is recognised in the past, we have some reason to believe history will repeat itself. ‘Some’ reason, since even when we can prove a relationship in the past, we often cannot guarantee it in the future. Still, history does tend to repeat itself and offering estimates is a whole lot better than offering nothing at all. This is obviously no argument to ignore common sense. When for any reason a statistical relationship is not proven, but common sense dictates there is a relationship, it wouldn’t make sense to exclude the KRI from business reporting.
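A first step on the ‘hard way’ is simply to measure how strongly a candidate cause and a key risk effect have moved together in the past. The sketch below computes the Pearson correlation coefficient between the number of staff on duty and the number of complaints. The monthly figures are invented for illustration, and the choice of Pearson correlation is an assumption of this example; the article does not prescribe a specific statistic.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical monthly figures for one bank shop.
staff_on_duty = [4, 5, 6, 7, 8, 9]
complaints = [30, 27, 25, 20, 18, 15]

r = pearson(staff_on_duty, complaints)
print(f"correlation staff vs complaints: {r:.2f}")
```

A coefficient close to -1 in this made-up data would support the assumption that more staff goes together with fewer complaints. It says nothing yet about cause and effect or about the most efficient staffing level, which is why common sense and further analysis remain necessary.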

Control measures

Now the business is provided with current information on key risk effects and their causes. The business will start implementing measures using its brand new information on KRI’s. To control, for example, the number of available resources, management could decide to increase the number and size of bonuses to stimulate employees to put in more hours. The key question of course will be ‘does this have any effect?’. Again the answers must come from statistics. We could assume, using common sense, that employees do put in more hours when the reward goes up, but we would then accept a very real risk that this assumption is completely false. The only assessments of the effectiveness of measures that have any value at all must be based on facts. Therefore the operational data on the measures must be analysed. Only when a statistical relation with a key risk effect is proven should the measure be included in reporting to management. From that point on the measure should be maintained as an effective control measure for that particular key risk effect.
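One way to base the ‘does this have any effect?’ question on facts is to compare operational data from before and after the measure was introduced. The sketch below uses a simple permutation test: it estimates how often a difference at least as large as the observed one would arise if the measure had no effect at all. The hours worked are invented figures, and the permutation test is just one possible technique chosen for this example.

```python
import random


def permutation_p_value(before, after, rounds=10_000, seed=1):
    """One-sided p-value: chance that 'after' exceeds 'before' this much by luck alone."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    n_before = len(before)
    observed = sum(after) / len(after) - sum(before) / n_before
    pooled = list(before) + list(after)
    hits = 0
    for _ in range(rounds):
        rng.shuffle(pooled)
        fake_before = pooled[:n_before]
        fake_after = pooled[n_before:]
        diff = sum(fake_after) / len(fake_after) - sum(fake_before) / n_before
        if diff >= observed:
            hits += 1
    return hits / rounds


# Hypothetical weekly hours per employee before and after the bonus change.
hours_before = [36, 38, 37, 35, 39, 36, 37, 38]
hours_after = [39, 41, 40, 38, 42, 40, 39, 41]

p = permutation_p_value(hours_before, hours_after)
print(f"p-value: {p:.4f}")
```

A small p-value indicates that the increase in hours is unlikely to be a coincidence. Whether the extra hours in turn reduce a key risk effect such as complaints is a separate relationship that needs its own analysis.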

Gathering data

The described approach focuses on using statistics to prove and define relationships. This approach only works with a certain volume of facts, so it will not suit the smallest companies that only have small volumes of operational data. For larger companies it will only work when the company recognises the need to record a broad range of operational facts in e.g. a data warehouse. To really gain knowledge of what causes the most significant risk effects, one must be prepared to start gathering data. Data must be gathered on all possibly relevant domains, without knowing and specifying in advance exactly which information represents (assumed) causes of risk effects. This approach allows analysts to think out of the box and use their statistical techniques in unexplored territories. They might just discover that sales drops are influenced mostly by the weather…

Summary

To summarise, the key elements for a successful KRI implementation are:

- Increase management’s focus on operational risk by including key risk effects in their performance contracts.

- The basis of reporting must be accurate, current reporting of the key risk effects.

- As a result of statistical analysis, indicators / causes of the key risk effects are included in reporting.

- Business management implements control measures to influence the key risk effects. Reporting must include the effectiveness of these control measures.

- Finally, to make all this possible the business must be prepared to invest in the recording of a broad range of operational data.
